We are designing an automated system to apply tracking tags to living honeybees. This project will:

- Streamline data collection and study of beehives and individual bees

- Automate the arduous bee-tagging process for beekeepers

- Monitor certain behaviors within the desired hives

Skills: Motor control, precision mechanisms, machine learning, computer vision

Bee Tagging consists of two sub-teams: Hardware and Software.

Hardware

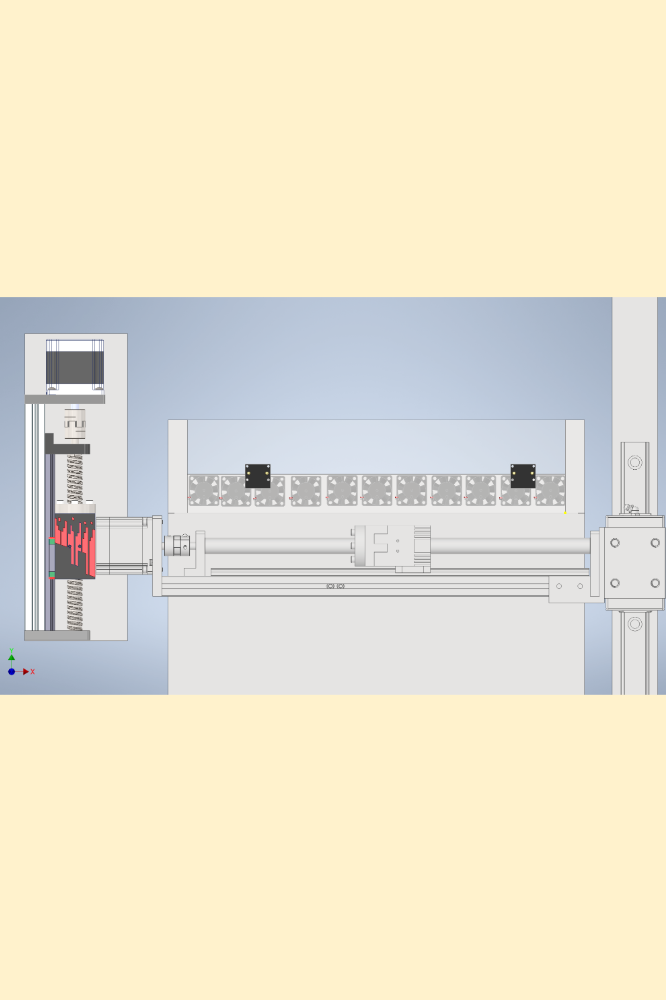

This team is responsible for creating all of the mechanical and electrical systems. We use Autodesk Inventor and Fusion 360 for CAD, and a Raspberry Pi to control our electrical components, mainly linear actuators, motors, and servos.

The system works as follows:

- Bees walk over a mesh surface

- Fans suck the bees down, trapping them in place

- The Tag Applicator moves to pick up a tag from the Tag Dispenser

- The Tag Applicator moves the tag to receive glue from the Glue Dispenser

- The Tag Applicator applies the tag to the bee’s thorax

- Repeat the three Tag Applicator steps (pick up, glue, apply) for every bee on the mesh surface

- Turn off the fans to release the bees
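The cycle above maps naturally onto a small control loop. The sketch below is a minimal, hardware-free version: `FakeRig`, `tagging_cycle`, and every method name are hypothetical placeholders that only record commands, so the sequencing can be checked on a laptop before any Raspberry Pi GPIO code is written. The `try/finally` ensures the fans switch off and the bees are released even if a step fails partway through.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) target on the mesh, e.g. in mm (assumed units)


@dataclass
class FakeRig:
    """Hypothetical stand-in for the real hardware that only records commands.

    None of these method names come from the team's code; they are placeholders.
    """
    log: List[str] = field(default_factory=list)

    def set_fans(self, on: bool) -> None:
        self.log.append("fans on" if on else "fans off")

    def move_applicator(self, target: str) -> None:
        self.log.append(f"move {target}")

    def pick_tag(self) -> None:
        self.log.append("pick tag")

    def apply_glue(self) -> None:
        self.log.append("glue")

    def press_tag(self) -> None:
        self.log.append("press tag")


def tagging_cycle(rig: FakeRig, thorax_positions: List[Point]) -> int:
    """Hold the bees, tag each one in turn, then release them. Returns tags applied."""
    rig.set_fans(True)  # fans pull the bees down onto the mesh
    tagged = 0
    try:
        for x, y in thorax_positions:
            rig.move_applicator("tag dispenser")   # pick up a tag
            rig.pick_tag()
            rig.move_applicator("glue dispenser")  # receive glue
            rig.apply_glue()
            rig.move_applicator(f"bee at ({x:.1f}, {y:.1f})")  # apply to thorax
            rig.press_tag()
            tagged += 1
    finally:
        rig.set_fans(False)  # always release the bees, even if a step fails
    return tagged


rig = FakeRig()
print(tagging_cycle(rig, [(12.0, 30.5), (40.2, 18.0)]))  # → 2
print(rig.log[0], "...", rig.log[-1])  # → fans on ... fans off
```

Swapping `FakeRig` for a class that drives the real actuators would leave `tagging_cycle` unchanged, which keeps the sequencing logic testable without hardware.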

Skills used: Raspberry Pi, Fusion 360, motor control, showing up ._.

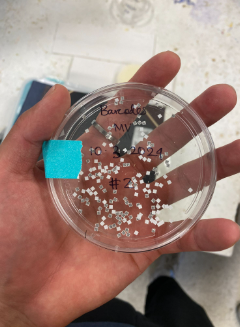

New Recruit Project: Design a Tag Dispenser that automatically dispenses 2 mm square paper tags. The tags must come out right-side up (bonus points if they all point in the same direction). Minimize manual labor: no stacking the tags one by one (unless you want to do that thousands of times).

Please propose some ideas for this in your application!

Software

This team is responsible for designing the software that detects bees in the enclosed chamber and sends their positions to the actuators. Video from a camera module is sent to a Raspberry Pi, where a machine learning model identifies the bees and communicates their coordinates to the actuators.
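Sending coordinates to the actuators implies a calibration step: the model reports positions in image pixels, while the actuators move in their own units (assumed here to be millimetres). A minimal sketch, assuming the camera looks straight down at the mesh so that a 2D affine map is adequate, fits that map from three reference marks whose pixel and actuator positions are both known. `fit_affine` and the mark positions are hypothetical; a tilted camera would need a full homography instead (OpenCV's `cv2.findHomography` is one option), and more than three marks with a least-squares fit would be more robust.

```python
from typing import Callable, List, Tuple

Pair = Tuple[float, float]


def _det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(m: List[List[float]], rhs: List[float]) -> List[float]:
    """Solve a 3x3 linear system with Cramer's rule."""
    d = _det3(m)
    if abs(d) < 1e-12:
        raise ValueError("calibration marks are collinear")
    out = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]  # replace one column with the right-hand side
        out.append(_det3(mc) / d)
    return out


def fit_affine(pixels: List[Pair], stage_mm: List[Pair]) -> Callable[[float, float], Pair]:
    """Fit stage = A @ pixel + t from exactly three (pixel, stage) mark pairs."""
    if len(pixels) != 3 or len(stage_mm) != 3:
        raise ValueError("need exactly three calibration marks")
    m = [[float(u), float(v), 1.0] for u, v in pixels]
    ax = _solve3(m, [float(x) for x, _ in stage_mm])  # coefficients for stage x
    ay = _solve3(m, [float(y) for _, y in stage_mm])  # coefficients for stage y

    def to_stage(u: float, v: float) -> Pair:
        return (ax[0] * u + ax[1] * v + ax[2], ay[0] * u + ay[1] * v + ay[2])

    return to_stage
```

For example, marks at pixels (0, 0), (640, 0) and (0, 480) that sit at (10, 20), (74, 20) and (10, 68) mm give 0.1 mm per pixel, so `to_stage(320, 240)` returns approximately (42.0, 44.0).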

System process:

- The camera captures footage of the bee chamber and streams it to the Raspberry Pi.

- The machine learning model identifies each bee and its thorax.

- The model places bounding boxes around both the bee and its thorax.

- The software computes coordinates from the bounding boxes and commands the actuator to move to the tagging location.
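One way to turn the two sets of bounding boxes into tagging locations is to pair each thorax box with the bee box that contains its center, then aim at that thorax center. This is a minimal sketch under stated assumptions: boxes are pixel coordinates (x1, y1, x2, y2) with a confidence score, the 0.5 cutoff is arbitrary, and `Box`/`tag_targets` are hypothetical names. Bees without a confident thorax are skipped rather than guessed, on the assumption that a missed tag is safer than glue on a wing or head. The resulting pixel targets would still need to be mapped into actuator coordinates through a camera calibration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Box:
    """Axis-aligned box in pixels: (x1, y1) top-left, (x2, y2) bottom-right."""
    x1: float
    y1: float
    x2: float
    y2: float
    score: float = 1.0  # model confidence

    def center(self) -> Tuple[float, float]:
        return ((self.x1 + self.x2) / 2, (self.y1 + self.y2) / 2)

    def contains(self, px: float, py: float) -> bool:
        return self.x1 <= px <= self.x2 and self.y1 <= py <= self.y2


def tag_targets(bees: List[Box], thoraxes: List[Box],
                min_score: float = 0.5) -> List[Tuple[float, float]]:
    """Return one pixel target per confidently detected bee: its thorax center."""
    targets = []
    used = set()  # each thorax box may be assigned to at most one bee
    for bee in bees:
        if bee.score < min_score:
            continue
        best: Optional[int] = None
        for i, th in enumerate(thoraxes):
            if i in used or th.score < min_score:
                continue
            # Assign a thorax to this bee if its center lies inside the bee box,
            # preferring the most confident candidate.
            if bee.contains(*th.center()) and (best is None or th.score > thoraxes[best].score):
                best = i
        if best is not None:
            used.add(best)
            targets.append(thoraxes[best].center())
    return targets
```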

Skills used: Computer vision, machine learning, Raspberry Pi, Linux, and a willingness to learn!

Feb 2025 Update: At this time, all Bee Tagging Software positions have been filled. Keep an eye out for future opportunities down the road!

New Recruit Ideas: Given a fully labeled dataset of images with bounding boxes marking bees and their individual thoraxes, explain how you would train and verify a model that accurately identifies the bees (e.g., the framework, training routine, and parameters). Feel free to include code/pseudocode to explain your thought process.
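Training depends on the chosen framework (for example, a YOLO-family detector through the Ultralytics package, or torchvision's Faster R-CNN), but verification can be framework-independent. A minimal sketch, assuming predictions and labels are (x1, y1, x2, y2) pixel boxes, computes intersection over union (IoU) and then precision and recall at an IoU threshold; it would be run on a held-out test split the model never saw during training. The function names are illustrative, the 0.5 threshold is a common convention, and standard benchmarks summarize the same idea as mean average precision (mAP) across confidence thresholds.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds: List[Tuple[Box, float]], truths: List[Box],
                     iou_thr: float = 0.5) -> Tuple[float, float]:
    """Greedy matching: higher-confidence predictions claim ground truths first."""
    matched = set()
    tp = 0
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(truths):
            if j in matched:
                continue  # each ground-truth box can be matched only once
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
    fp = len(preds) - tp   # unmatched predictions, including duplicates
    fn = len(truths) - tp  # bees the model missed
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if truths else 0.0
    return precision, recall
```

A duplicate detection of the same bee counts as a false positive: predictions (0, 0, 10, 10) at 0.9, the same box at 0.8, and (50, 50, 60, 60) at 0.7, scored against ground truths (0, 0, 10, 10) and (20, 20, 30, 30), give a precision of 1/3 and a recall of 1/2. Running this separately for the bee and thorax classes would show which one the model finds harder.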

Here’s something else we’re currently thinking about: once the bee and its thorax are recognized in the camera feed, we need an algorithm that receives the positional data and relays it to the actuator. However, bees can change location erratically and flap their wings very quickly (~200 beats per second), which challenges both the software and hardware implementations. What are some ways we can mitigate this?
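At roughly 200 beats per second, one wingbeat lasts about 5 ms, so a typical 30 fps camera sees six to seven beats per frame and the wings will blur; targeting the thorax, which moves far less than the wings, sidesteps much of that. For the bee's overall movement, one possible mitigation is to smooth the detected thorax position, estimate its velocity, wait until the bee is nearly still, and aim where it is predicted to be when the actuator arrives. The sketch below uses an alpha-beta filter (a simpler, fixed-gain relative of a constant-velocity Kalman filter); `ThoraxTracker` and all default values are hypothetical and would need tuning, and positions are assumed to already be in millimetres.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]  # position in mm after camera calibration (assumed)


@dataclass
class ThoraxTracker:
    """Alpha-beta filter on one bee's thorax position, plus a "stay still" gate.

    All default values are illustrative guesses that would need tuning.
    """
    alpha: float = 0.5        # position correction gain (0..1)
    beta: float = 0.1         # velocity correction gain
    max_speed: float = 2.0    # mm/s; slower than this counts as still
    still_needed: int = 5     # consecutive still frames required before tagging
    x: Optional[Point] = None
    v: Point = (0.0, 0.0)
    still_count: int = 0

    def update(self, z: Point, dt: float) -> Point:
        """Fold in one detection z taken dt seconds after the previous one."""
        if self.x is None:  # first detection: just initialise
            self.x = z
            return z
        # Predict with the constant-velocity model, then correct by the residual.
        px = self.x[0] + self.v[0] * dt
        py = self.x[1] + self.v[1] * dt
        rx, ry = z[0] - px, z[1] - py
        self.x = (px + self.alpha * rx, py + self.alpha * ry)
        self.v = (self.v[0] + self.beta * rx / dt, self.v[1] + self.beta * ry / dt)
        # Count consecutive frames in which the bee is (nearly) stationary.
        if math.hypot(*self.v) < self.max_speed:
            self.still_count += 1
        else:
            self.still_count = 0
        return self.x

    def ready_to_tag(self) -> bool:
        return self.still_count >= self.still_needed

    def predict(self, lead_time: float) -> Point:
        """Expected position after lead_time seconds, e.g. the actuator travel time."""
        if self.x is None:
            raise ValueError("no detections yet")
        return (self.x[0] + self.v[0] * lead_time, self.x[1] + self.v[1] * lead_time)
```

In a control loop, `update` would be called for every frame in which the thorax is detected, the actuator would be commanded only once `ready_to_tag()` is true, and it would aim at `predict(t)`, where `t` is the measured actuator travel time. A full Kalman filter would adapt the gains to measured noise, at the cost of more tuning.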

We’d love to see your ideas in your application!